As artificial intelligence (AI) advances rapidly, concerns about its ethical implications are growing. AI systems have become increasingly complex, and their applications now range from improving productivity in industries such as healthcare and finance to powering autonomous vehicles that must interact safely and reliably with people.

The question remains: who is responsible for ensuring that AI is developed ethically? Is it the developers themselves, the corporations that commission the systems, governments, or a collective effort by all of these parties?

One of the primary ethical concerns about AI is its potential impact on employment. As AI systems become more sophisticated, they can automate tasks that were previously performed by humans, which has led to fears that widespread adoption of AI could cause significant job displacement.

Concerns like these have fueled a growing recognition that responsible AI development requires not only technical expertise but also social and emotional intelligence. Developers must be able to anticipate how their creations will affect individuals, communities, and society as a whole.

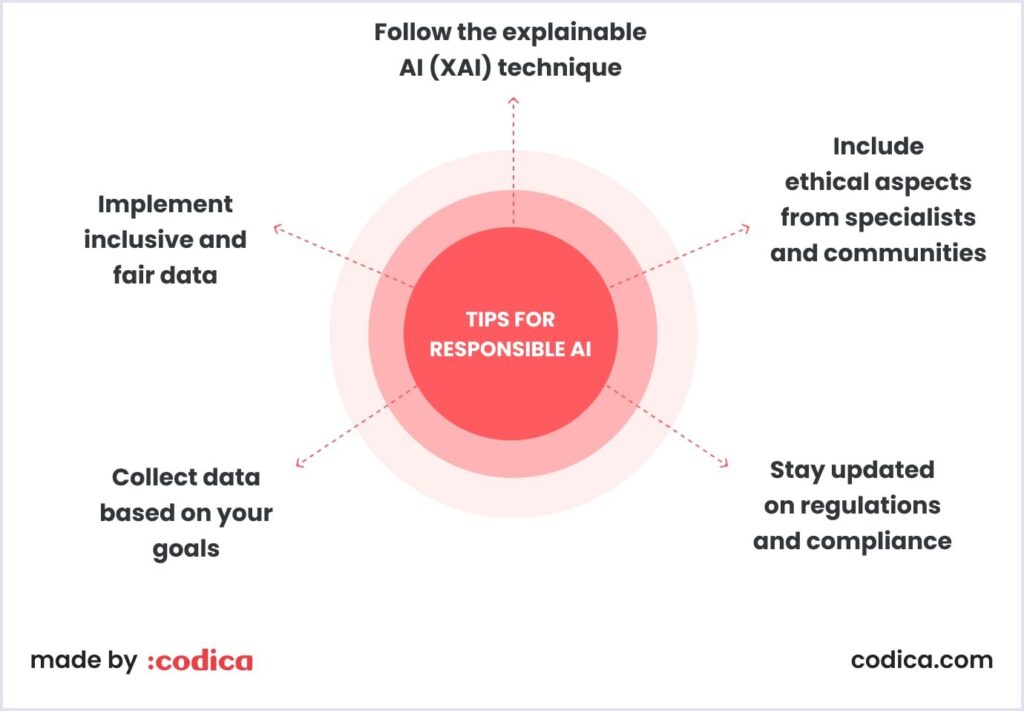

Several steps can help ensure accountability for the ethical development of AI. First, regulatory bodies must establish clear guidelines and standards for developing and deploying AI systems. These could include requirements for transparency in AI decision-making, as well as robust safeguards against bias and discrimination.

Second, corporations that commission AI systems must take responsibility for how those systems are used and distributed. This includes making employees aware of the risks that AI-powered automation poses to their roles, and providing support and retraining programs for those who may be displaced.

Third, governments can promote responsible AI development by funding research into more transparent and accountable AI systems, including projects that aim to establish human-centered design principles, and by setting national standards for AI safety and security.

Ultimately, developing ethical AI requires a multifaceted approach in which developers, corporations, governments, and civil society collaborate. By working together, these groups can help ensure that the benefits of AI are realized while its risks are kept to a minimum.