As artificial intelligence (AI) technology advances at an unprecedented pace, concern is growing about its potential impact on society. One of the most pressing questions is who should be held responsible for the decisions AI systems make. As these systems become more sophisticated and autonomous, ensuring accountability and ethical choices becomes a daunting task.

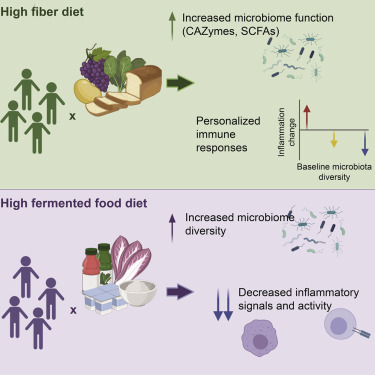

The development of AI has led to numerous benefits, including increased efficiency, improved accuracy, and enhanced decision-making capabilities. However, as AI systems take on more complex tasks, they also require careful consideration of their potential consequences. For instance, AI-powered surveillance systems have raised concerns about mass monitoring, while self-driving cars raise questions about liability in the event of an accident.

Currently, there is no universally accepted framework for addressing these concerns and ensuring accountability. Some argue that corporations and governments should take responsibility for the AI systems they develop and deploy, as they are often the primary beneficiaries. Others contend that AI development should be governed by a global regulatory body or an industry-led initiative that establishes standardized guidelines and best practices.

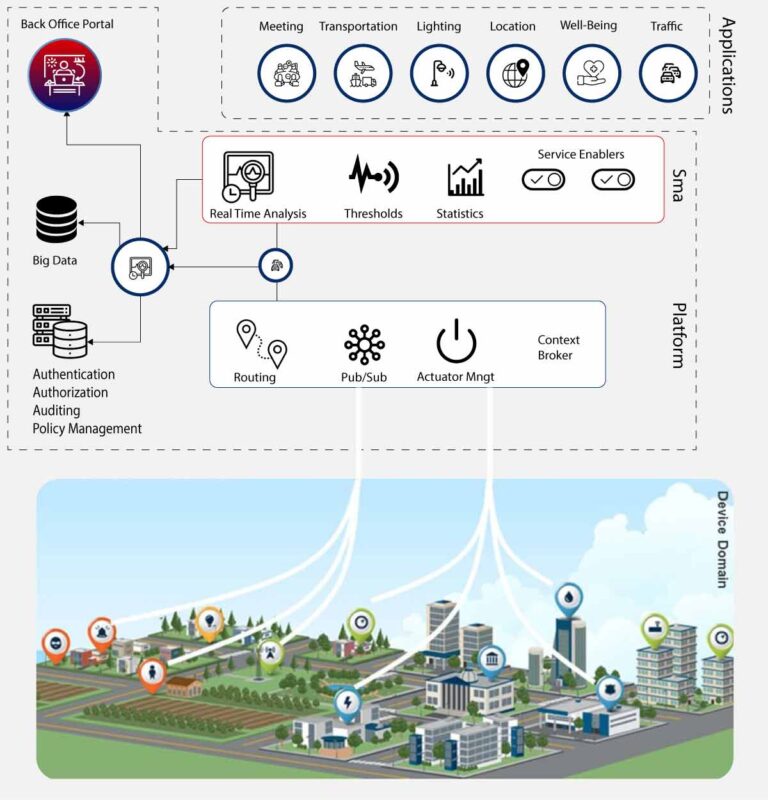

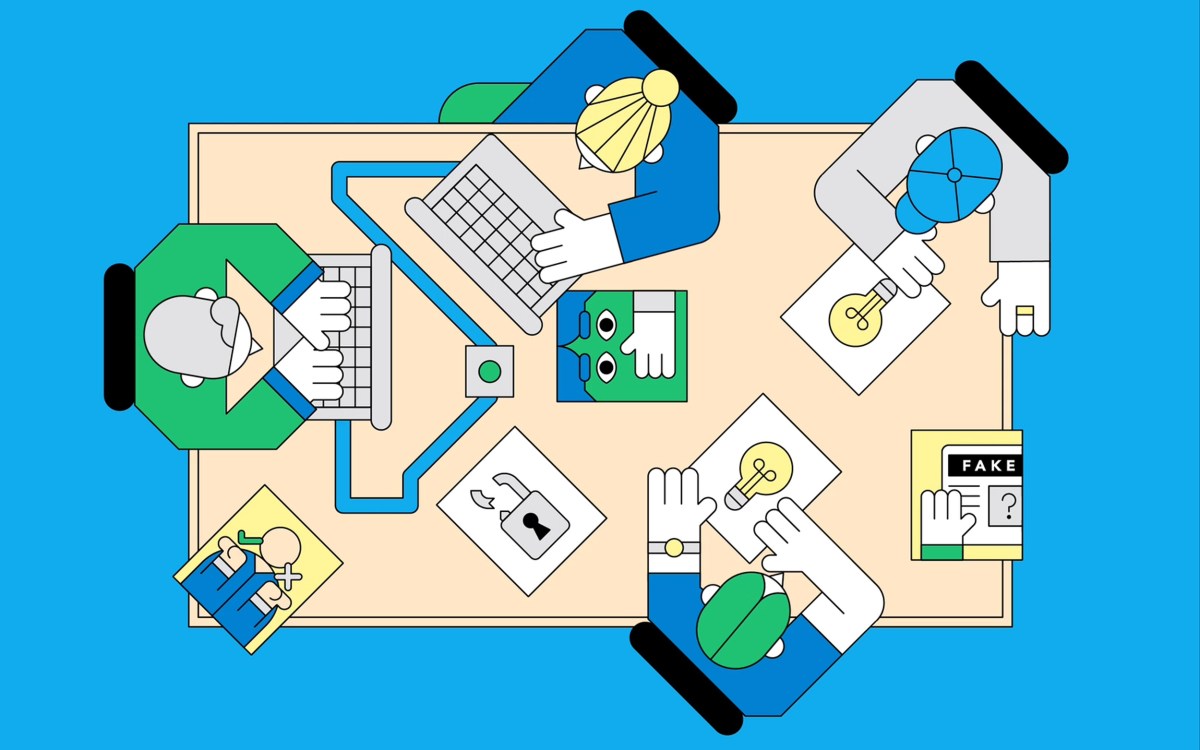

One potential solution is to create a hybrid model, where multiple stakeholders work together to ensure accountability. This could involve governments establishing regulations and standards for AI development, while also providing incentives for corporations to prioritize responsible AI design. Additionally, academia and research institutions can play a crucial role in developing new algorithms and techniques that prioritize transparency, explainability, and fairness.

Another critical aspect of ensuring accountability is the need for diverse representation on committees and panels involved in AI decision-making. This could include experts from various fields, such as ethics, law, sociology, and engineering. Moreover, there should be mechanisms in place to address bias and ensure that AI systems are designed with fairness and equity in mind.

Implementing robust auditing and testing protocols is also essential for ensuring accountability. Regular assessments of AI systems’ performance and decision-making processes can help identify potential flaws or biases. Furthermore, transparency about data sources and algorithms used in AI systems should be a fundamental aspect of responsible development.
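To make the idea of a regular assessment concrete, here is a minimal sketch of one possible audit check, written in Python. It compares the rate of favourable decisions across groups and flags the system when the gap is large. The function names, the `(group, decision)` record format, the sample data, and the `0.2` threshold are all illustrative assumptions, not an established standard; a real audit would look at many more metrics and at the underlying data.

```python
from collections import defaultdict


def selection_rates(records):
    """Return the share of favourable decisions for each group.

    `records` is an iterable of (group, decision) pairs, where a truthy
    decision means a favourable outcome. This format is hypothetical.
    """
    totals = defaultdict(int)
    positives = defaultdict(int)
    for group, decision in records:
        totals[group] += 1
        if decision:
            positives[group] += 1
    return {group: positives[group] / totals[group] for group in totals}


def parity_gap(records):
    """Difference between the highest and lowest group selection rates."""
    rates = selection_rates(records)
    if not rates:
        return 0.0  # nothing to compare
    return max(rates.values()) - min(rates.values())


def audit(records, threshold=0.2):
    """Summarize the check; `threshold` is an arbitrary illustrative cutoff."""
    records = list(records)
    gap = parity_gap(records)
    return {
        "rates": selection_rates(records),
        "gap": gap,
        "flagged": gap > threshold,
    }


# Made-up decisions for two hypothetical groups, A and B.
sample = [
    ("A", 1), ("A", 1), ("A", 0), ("A", 1),
    ("B", 1), ("B", 0), ("B", 0), ("B", 0),
]
report = audit(sample)
print(report)
```

A flagged result is a prompt for human review rather than proof of unfairness: a gap can have legitimate explanations, and a small gap does not rule out other kinds of bias.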

Ultimately, the future of AI depends on our ability to strike a balance between technological advancements and social responsibility. As we move forward with AI development, it is crucial that we prioritize accountability, fairness, and transparency. By working together across industries and sectors, we can ensure that AI systems serve humanity’s best interests and contribute positively to society.

The question of who should be responsible for the decisions AI systems make remains a pressing concern. However, by exploring potential solutions such as hybrid governance models, diverse representation, auditing protocols, and transparency, we can begin to address it and create a more accountable and responsible AI landscape.